About

I am a senior Computer Science student in the Cognitive Learning for Vision and Robotics Lab (CLVR) at USC, advised by Professor Joseph J. Lim. I also work under the guidance of Dr. Jim Fan at NVIDIA Research.

My research aims to develop intelligent robots that learn, act, and improve by perceiving and interacting with a complex and dynamic world. I seek methods that enable robots to memorize and imagine, two core capabilities of human intelligence. My research relies on offline data and trains robots to absorb information from explicit demonstrations, general observation, and their own experimentation. Ultimately, I hope that robots and machines can be equipped with high-level cognitive skills to assist people in scientific discovery, education, and the pursuit of an interplanetary humanity on Mars and beyond.

Research

Within deep reinforcement learning and robotics, I am particularly interested in:

Model-based learning: building internal world models of the embodied self and its environment to develop a general understanding of the world and to improve sample efficiency through planning.

Hierarchical / Skill-based learning: enabling long-horizon task learning through temporal abstraction and guided exploration.

Embodied AI: large models currently operate mostly on words and images; how can we ground them so that they learn from direct experience of the world?

A sample of other topics that I’m also very curious about: generative models, meta-learning, external memory.

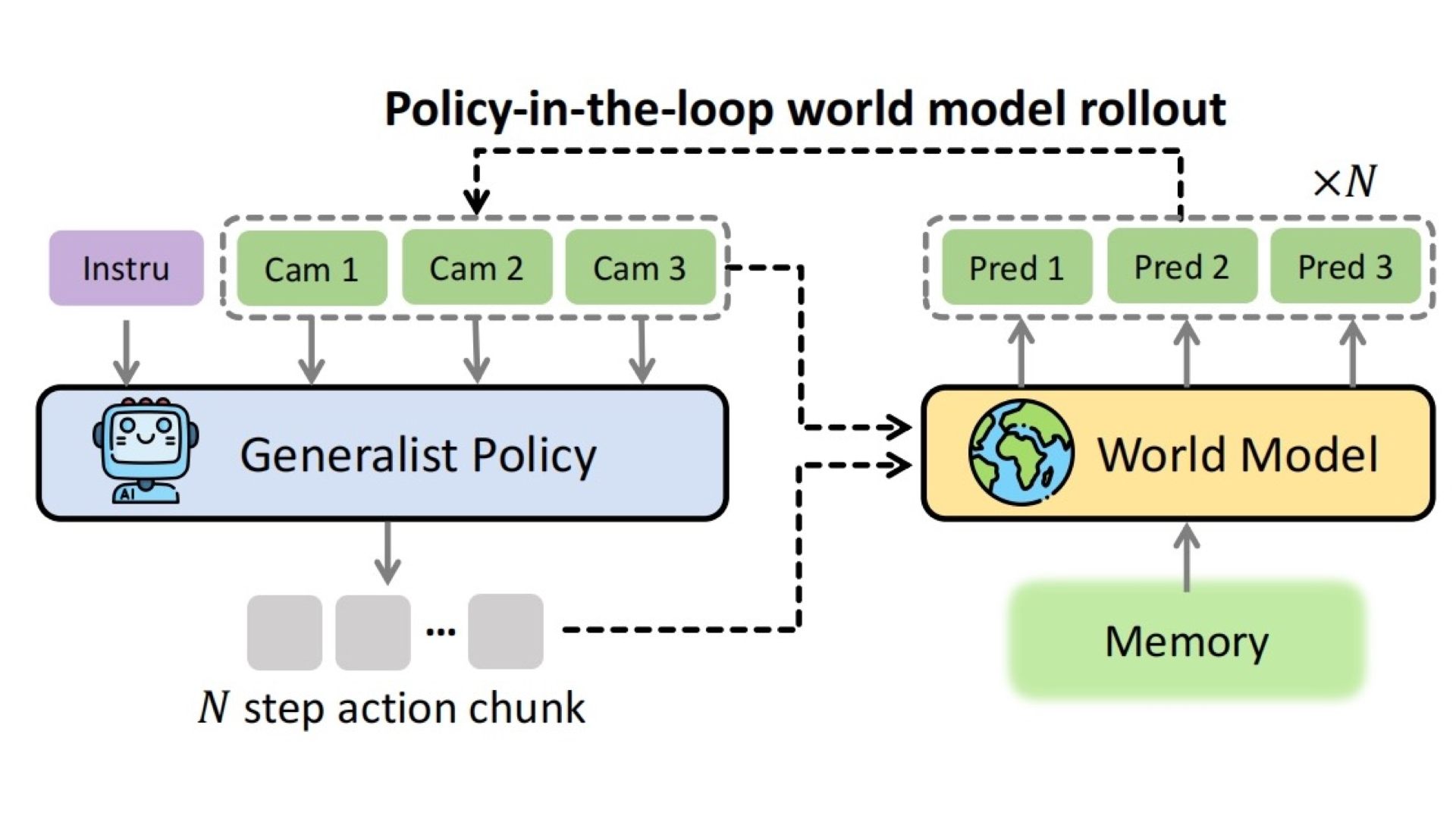

Ctrl-World: A Controllable Generative World Model for Robot Manipulation

Yanjiang Guo*, Lucy Xiaoyang Shi*, Jianyu Chen, Chelsea Finn

in submission, 2025

code

A controllable world model that can evaluate and improve VLA policies.

Hi Robot: Open-Ended Instruction Following with Hierarchical Vision-Language-Action Models

Lucy Xiaoyang Shi, Brian Ichter, Michael Equi, Liyiming Ke, Karl Pertsch, Quan Vuong, James Tanner, Anna Walling, Haohuan Wang, Niccolo Fusai, Adrian Li-Bell, Danny Driess, Lachy Groom, Sergey Levine, Chelsea Finn

International Conference on Machine Learning (ICML), 2025

We are teaching robots to listen and think harder.

Yell At Your Robot: Improving On-the-Fly from Language Corrections

Lucy Xiaoyang Shi, Zheyuan Hu, Tony Z. Zhao, Archit Sharma, Karl Pertsch, Jianlan Luo, Sergey Levine, Chelsea Finn

Robotics: Science and Systems (RSS), 2024

code

YAY Robot leverages verbal corrections to enable on-the-fly adaptation and continuous policy improvement on complex long-horizon tasks.

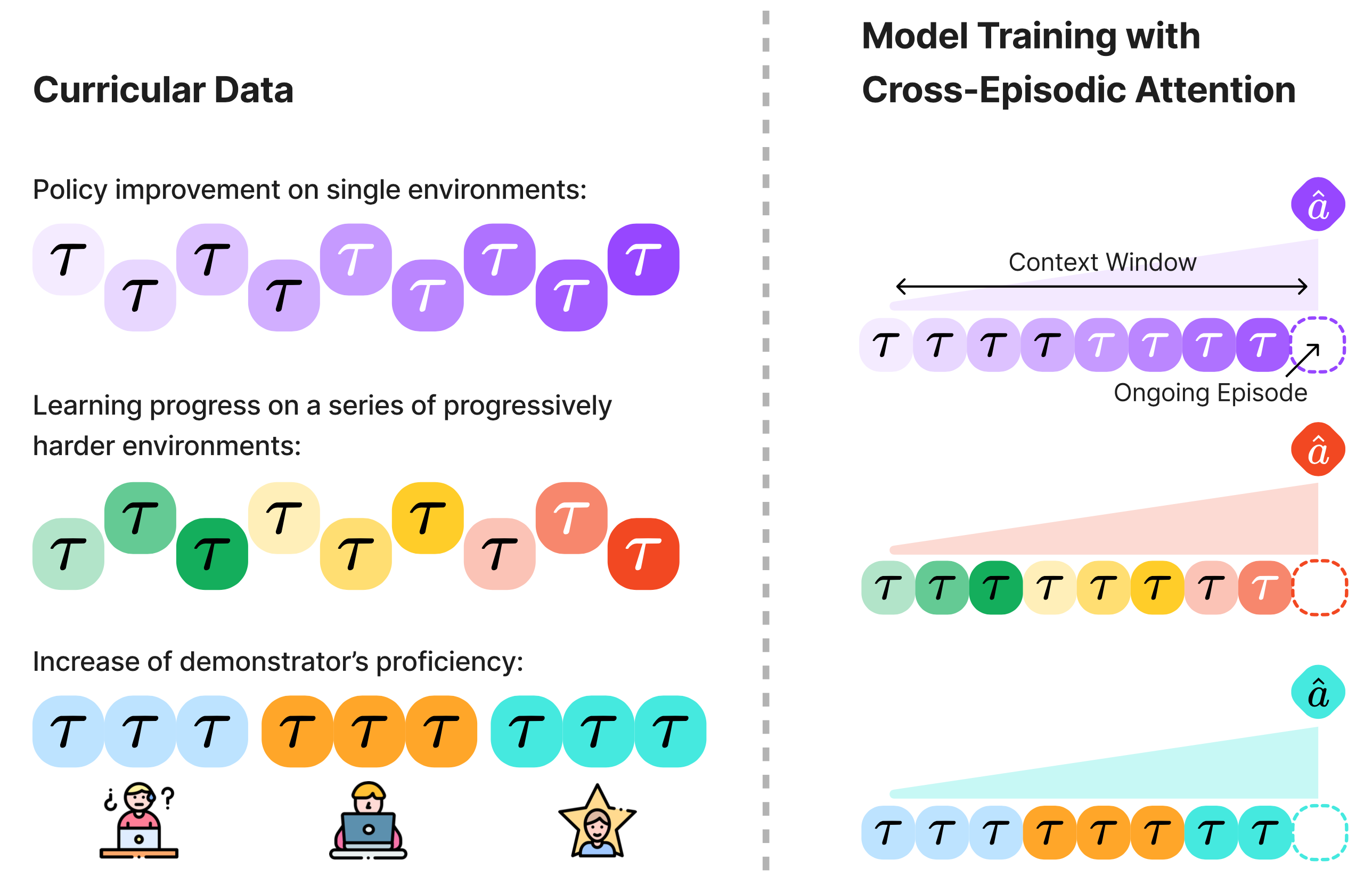

Cross-Episodic Curriculum for Transformer Agents

Lucy Xiaoyang Shi*, Yunfan Jiang*, Jake Grigsby, Linxi 'Jim' Fan†, Yuke Zhu†

Neural Information Processing Systems (NeurIPS), 2023

code

CEC enhances Transformer agents’ learning efficiency and generalization by structuring cross-episodic experiences in-context.

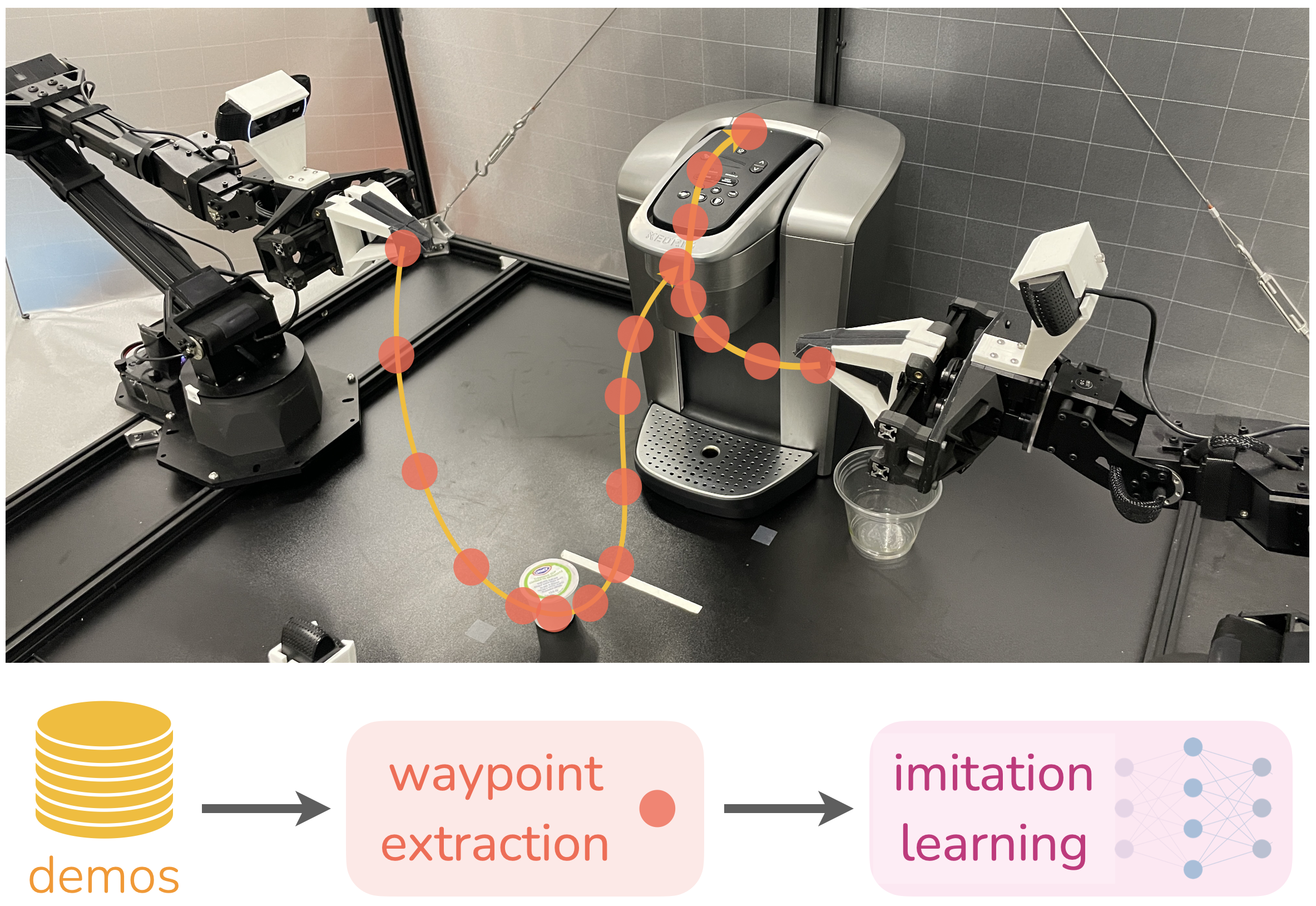

Waypoint-Based Imitation Learning for Robotic Manipulation

Lucy Xiaoyang Shi*, Archit Sharma*, Tony Z. Zhao, Chelsea Finn

Conference on Robot Learning (CoRL), 2023

code

We propose an automatic method for extracting waypoints from demonstrations for performant imitation learning.

Skill-based Model-based Reinforcement Learning

Lucy Xiaoyang Shi, Joseph J. Lim, Youngwoon Lee

Conference on Robot Learning (CoRL), 2022

code

We devise a method that enables model-based RL on long-horizon, sparse-reward tasks, allowing us to learn with 5x fewer samples.